LayerFlow: Layer-wise Exploration of LLM Embeddings using Uncertainty-aware Interlinked Projections

Abstract

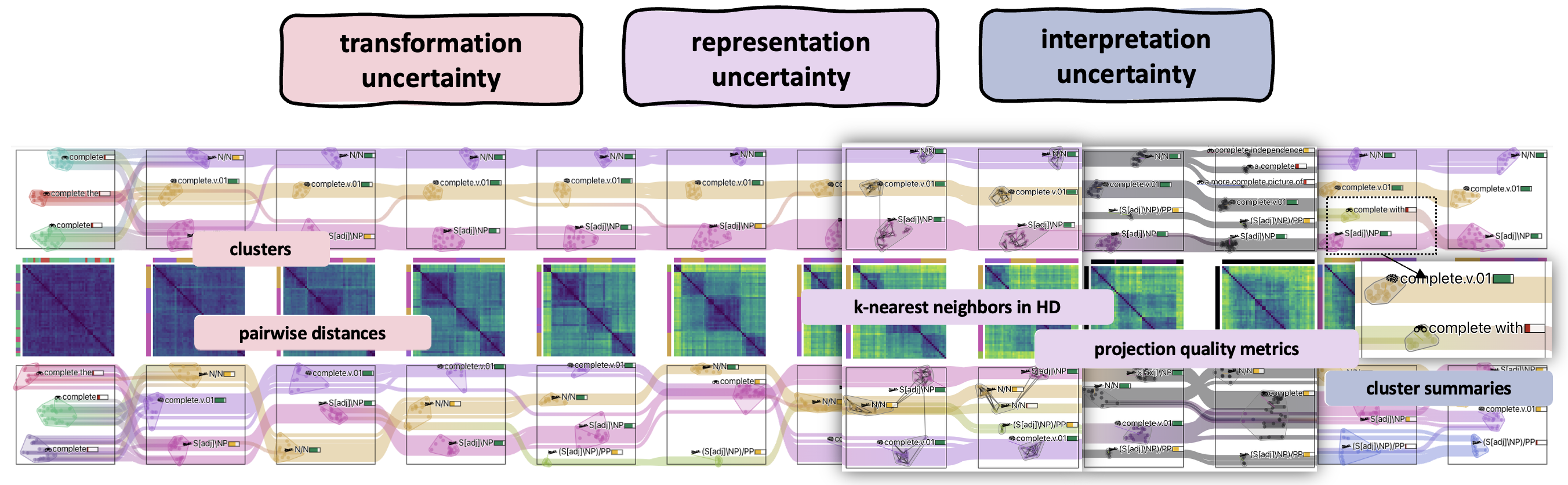

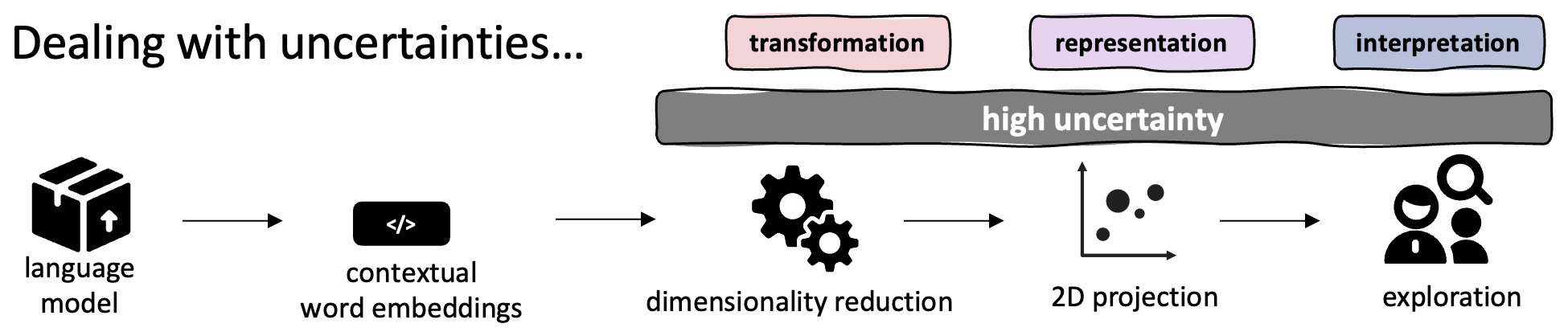

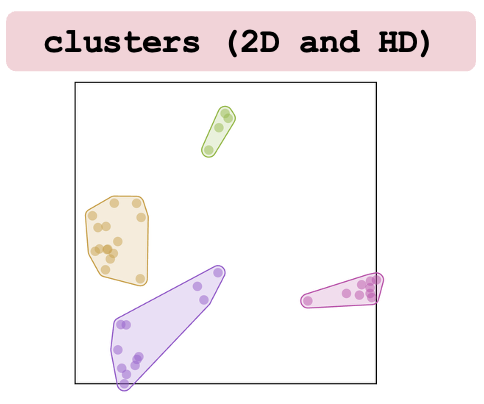

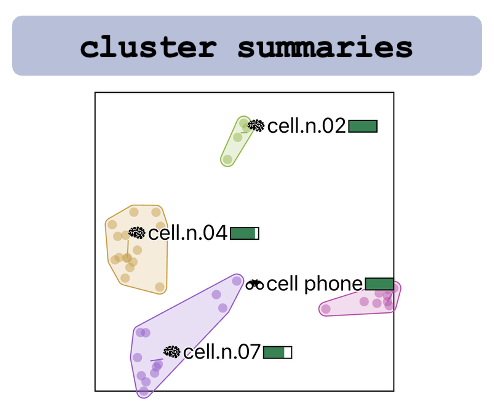

Large language models (LLMs) represent words through contextual word embeddings that encode language properties such as semantics and syntax. Understanding these properties is crucial, especially for researchers who investigate language model capabilities, employ embeddings for text-similarity tasks, or examine the reasons behind token importance as measured by attribution methods. Applications for embedding exploration frequently rely on dimensionality reduction techniques, which reduce high-dimensional vectors to two dimensions that serve as coordinates in a scatterplot. This data transformation step introduces uncertainty that can propagate to the visual representation and influence users' interpretation of the data. To communicate such uncertainties, we present LayerFlow, a visual analytics workspace that displays embeddings in an interlinked projection design and communicates the transformation, representation, and interpretation uncertainty. In particular, to hint at potential data distortions and uncertainties, the workspace includes several visual components, such as convex hulls showing clusters in the 2D and high-dimensional (HD) space, pairwise distances between data points, cluster summaries, and projection quality metrics. We demonstrate the usability of the workspace through replication and expert case studies that highlight the need to communicate uncertainty through multiple visual components and different data perspectives.
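To make the convex-hull component concrete, here is a minimal sketch. It assumes every token already has 2D coordinates and a cluster label. The label could come from clustering in the 2D projection or in the HD space. Each cluster is outlined with Andrew's monotone chain algorithm. The function names `convex_hull` and `cluster_hulls` are hypothetical, and the sketch is not taken from LayerFlow's implementation.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Point = Tuple[float, float]


def _cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); positive means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Sequence[Point]) -> List[Point]:
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        # Pop points that would make a clockwise (or collinear) turn.
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain repeats the first point of the other chain.
    return lower[:-1] + upper[:-1]


def cluster_hulls(
    coords_2d: Sequence[Point], labels: Sequence[Hashable]
) -> Dict[Hashable, List[Point]]:
    """Group 2D positions by cluster label (2D or HD clustering) and outline each group."""
    groups: Dict[Hashable, List[Point]] = defaultdict(list)
    for xy, label in zip(coords_2d, labels):
        groups[label].append(xy)
    return {label: convex_hull(pts) for label, pts in groups.items()}
```

Suppose the labels come from clustering in the HD space. Then overlapping hulls in the scatterplot would suggest that the projection mixes clusters that are separate in the original space. That is one plausible way to read such a view.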

Motivation and Key Challenge

A key focus in embedding analysis is the visual exploration of similarities to identify patterns in embedding clusters. The development of such visualizations has been an active field of study since the introduction of early static word embeddings such as word2vec. Current methods for embedding exploration often use dimensionality reduction techniques to reduce the high-dimensional (HD) vectors to two dimensions (2D), which then serve as coordinates for displaying words in a scatterplot.
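The following sketch shows the reduction step described above, using PCA as the example technique. It projects HD vectors onto their first two principal components with power iteration and deflation, written in plain Python so it has no dependencies. The name `pca_2d` is hypothetical. Real pipelines would normally call a library implementation such as scikit-learn's `PCA` or a non-linear method such as UMAP.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def _normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(_dot(v, v))
    return [x / norm for x in v]


def _top_eigenvector(cov: Matrix, iters: int = 500, seed: int = 0) -> List[float]:
    """Power iteration for the dominant eigenvector of a symmetric PSD matrix."""
    rng = random.Random(seed)
    v = _normalize([rng.random() + 0.1 for _ in range(len(cov))])
    for _ in range(iters):
        w = [_dot(row, v) for row in cov]
        if _dot(w, w) == 0.0:  # no variance left in this matrix
            break
        v = _normalize(w)
    return v


def pca_2d(vectors: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Project HD vectors onto their first two principal components."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    # d x d covariance matrix: fine for a toy example, far too slow for LLM hidden sizes.
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    axes = []
    for _ in range(2):
        axis = _top_eigenvector(cov)
        eigval = _dot(axis, [_dot(row, axis) for row in cov])
        axes.append(axis)
        # Deflation: remove the found component so the next pass finds the runner-up.
        cov = [[cov[i][j] - eigval * axis[i] * axis[j] for j in range(d)] for i in range(d)]
    # Scatterplot coordinates are projections of the centered vectors onto both axes.
    return [(_dot(r, axes[0]), _dot(r, axes[1])) for r in centered]
```

If the HD points happen to lie in a 2D plane, this projection preserves all pairwise distances. Real embeddings rarely have that property, and the distortion discussed next arises from exactly this gap.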

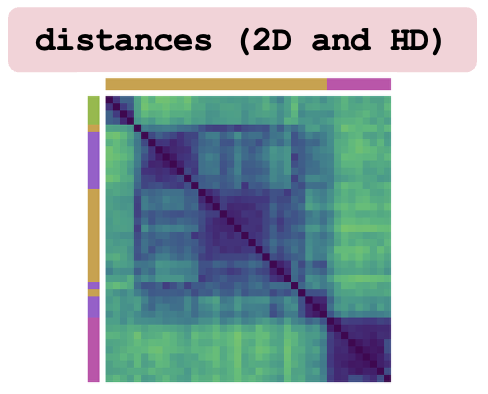

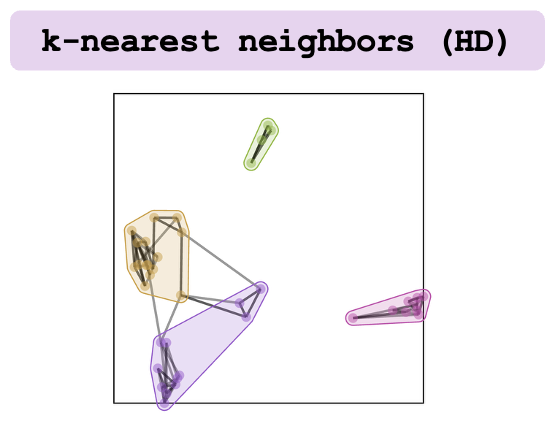

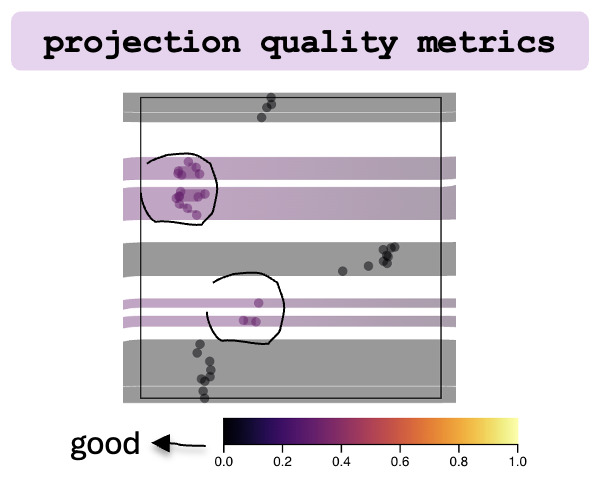

Dimensionality reduction methods have known limitations. Linear techniques such as PCA struggle with non-linear structures and may distort neighborhood relationships by placing distant points close together [Ma et al. 2018]. Non-linear methods such as UMAP prioritize local structure but can misrepresent global distances, leading to distortions in the reduced space. These distortions can obscure true relationships in the HD data and introduce uncertainties that affect the analysis [Nonato et al. 2019]. Haghighatkhah et al. [2022] classify these uncertainties into transformation, representation, and interpretation uncertainty, showing that visualizations may not faithfully reflect the original data. Clearly communicating these uncertainties is therefore vital, especially in tasks such as word embedding analysis.
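A projection quality metric can make this kind of neighborhood distortion measurable. The sketch below computes k-nearest-neighbor preservation: for each point, it takes the point's k nearest HD neighbors and measures what fraction are still among its k nearest neighbors in the projection. This is a generic example chosen for illustration, and it is not necessarily one of the metrics LayerFlow uses. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Set

Points = Sequence[Sequence[float]]


def _knn(points: Points, i: int, k: int) -> Set[int]:
    """Indices of the k nearest neighbors of point i (Euclidean), excluding i.

    Ties are broken by index so the result is deterministic.
    """
    ranked = sorted((math.dist(points[i], points[j]), j) for j in range(len(points)) if j != i)
    return {j for _, j in ranked[:k]}


def neighborhood_overlap(hd: Points, low: Points, k: int = 5) -> List[float]:
    """Per-point fraction of HD k-NN that are also k-NN in the projection."""
    if len(hd) != len(low):
        raise ValueError("hd and low must contain the same points")
    if not 0 < k < len(hd):
        raise ValueError("k must satisfy 0 < k < number of points")
    return [len(_knn(hd, i, k) & _knn(low, i, k)) / k for i in range(len(hd))]


def neighborhood_preservation(hd: Points, low: Points, k: int = 5) -> float:
    """Mean overlap: 1.0 means every neighborhood survived, 0.0 means none did."""
    scores = neighborhood_overlap(hd, low, k)
    return sum(scores) / len(scores)
```

As an example, take the four corners of a 1 x 5 rectangle and project them onto the short axis. Points that were 5 units apart now coincide, and each point's single nearest neighbor changes, so the score with `k=1` drops to 0.0. The per-point scores could also be color-coded in the scatterplot to flag points whose neighborhoods are unreliable.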

Visual Components for Uncertainty Communication

In this work, we aim to communicate the uncertainty in the embedding exploration process, addressing the uncertainty introduced by transformation, representation, and interpretation steps. Building upon our prior work that introduces an interlinked projection approach for embedding investigation [Sevastjanova et al. 2021], we present LayerFlow, an embedding exploration workspace that combines multiple visual components for uncertainty communication.

| Uncertainty Type | Explanation | Supportive Visual Components |
|---|---|---|

Citation

@inproceedings{sevastjanova2025layerflow,
  title={LayerFlow: Layer-wise Exploration of LLM Embeddings using Uncertainty-aware Interlinked Projections},
  author={Sevastjanova, Rita and Gerling, Robin and Spinner, Thilo and El-Assady, Mennatallah},
  booktitle={Computer Graphics Forum},
  pages={e70123},
  year={2025},
  organization={Wiley Online Library}
}